Introduction

A/B testing within Dynamics 365 Customer Insights - Journeys offers a robust mechanism for assessing and refining your marketing strategies. This tutorial will guide you through the process of setting up A/B tests for segment-based journeys, enabling you to effectively gauge the impact of different variations on audience engagement. Let's first explore what A/B testing is in general.

What Is A/B Testing in Marketing?

A/B testing is a data-driven approach in marketing that allows businesses to optimize their digital assets by comparing two variants and identifying the one that performs better. The process, often applied to webpages, emails, advertisements, popups, customer journeys, or landing pages, involves presenting the variants to different groups of users and using statistical analysis to determine which one performs better.

Key Advantages of A/B Testing in Dynamics 365 Customer Insights - Journeys:

• Enhanced Conversion Rates: By pinpointing the most effective content elements, A/B testing improves conversion rates, ensuring marketing efforts drive desired actions.

• Efficient Resource Allocation: Allocate resources more efficiently by focusing on proven strategies, saving time and costs associated with less effective marketing approaches.

• Continuous Campaign Improvement: A/B testing facilitates iterative optimization, allowing marketers to continually refine their campaigns and sustain their effectiveness.

• Data-Driven Optimization: A/B testing provides concrete data, enabling marketers to optimize email designs based on actual audience responses and fostering informed decision-making.

• Automated Decision-Making: Once a winner is determined, the winning version is sent automatically, which streamlines workflows and puts effective designs into use quickly.

Prerequisites

Before initiating an A/B test within a customer journey, ensure the following:

• Email Designs: Have at least two different email designs ready for testing. Label each design as either version A or version B.

• Target Segment: Identify the target segment for your A/B test within the customer journey. Ensure that the segment has a sufficient number of contacts to produce meaningful results.

Step-by-Step Guide to Run A/B Testing

1. Navigate to Real-time Journey:

• In Dynamics 365 Customer Insights - Journeys, navigate to Real-time Journey > Engagement > Journeys. Select an existing Segment-Based journey or create a new one.

2. Adding an Email Tile:

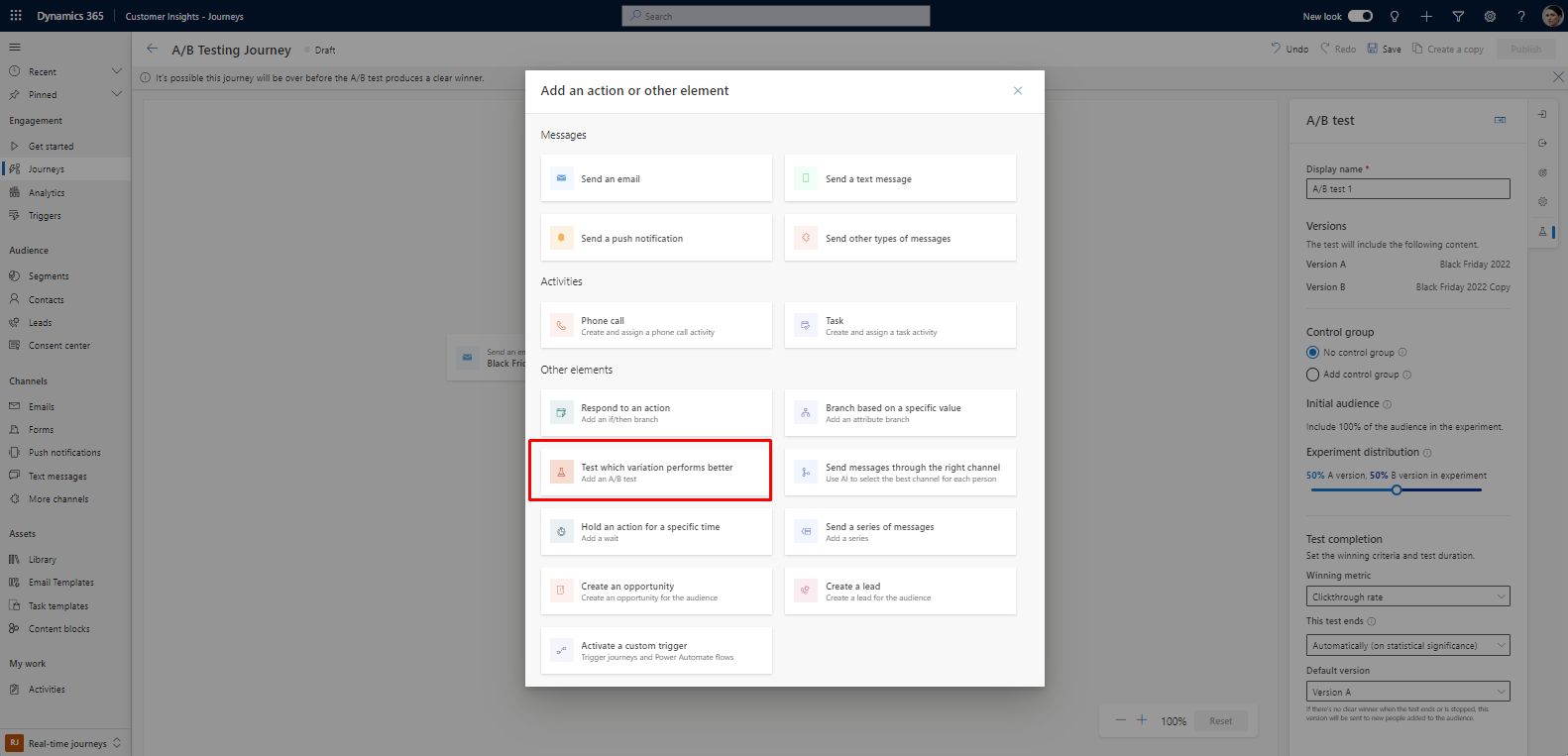

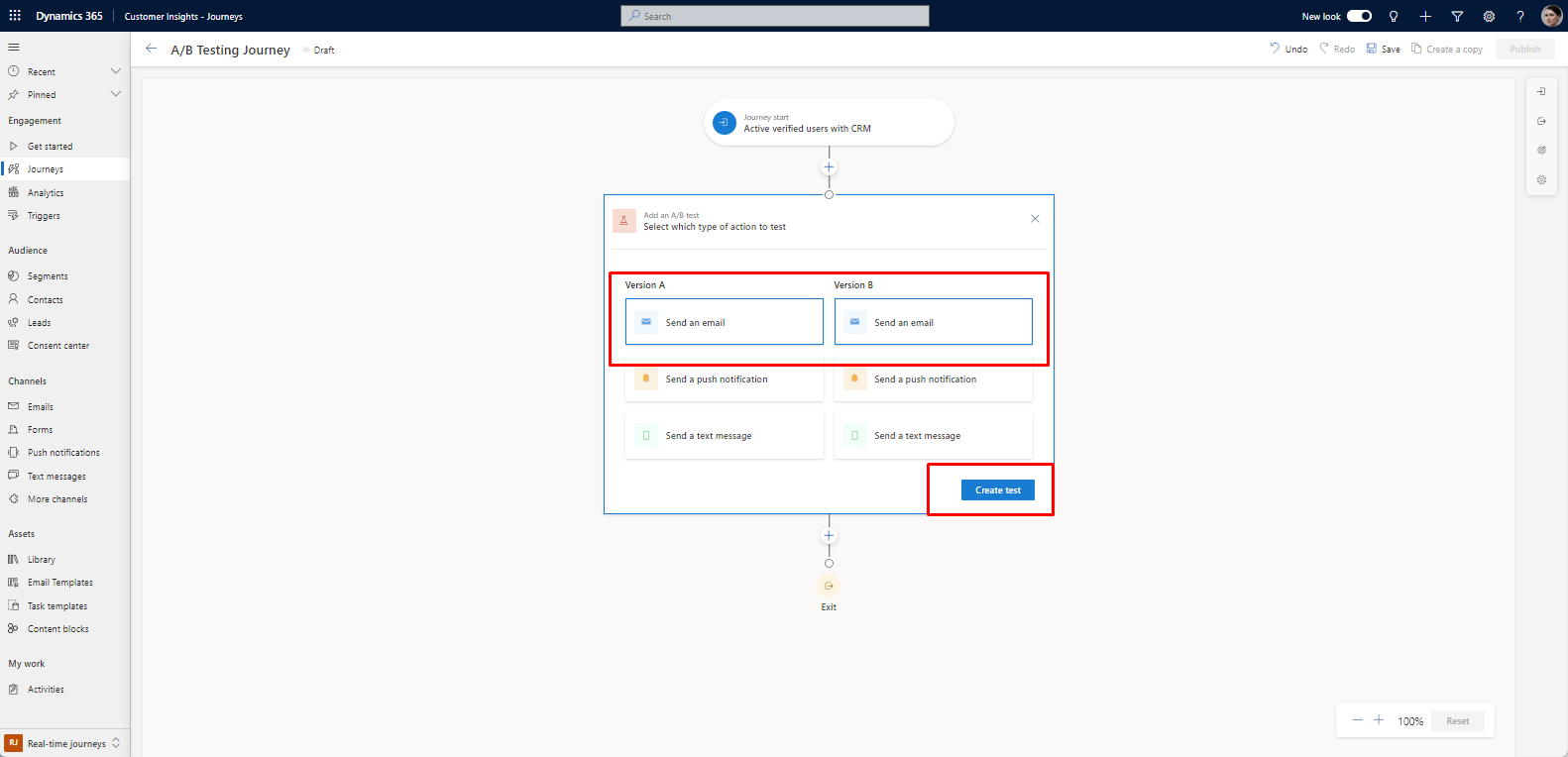

• Within the journey builder, incorporate an email tile for A/B testing. Click on the plus sign (+), and choose 'Test which variation performs better.'

• Select the channel you wish to test: email, push notification, or text message. Then, click the 'Create Test' button.

3. Configuring A/B Test:

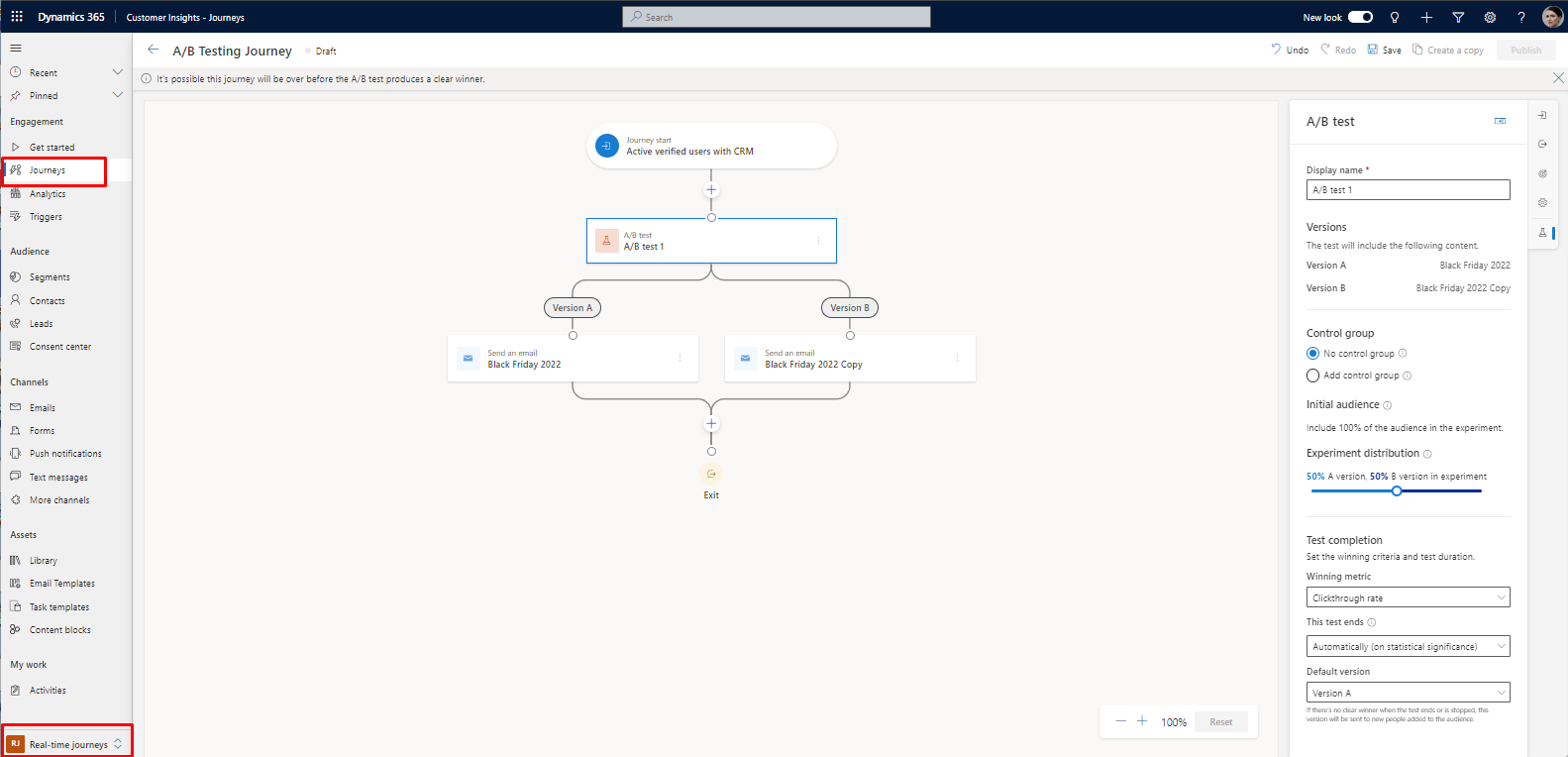

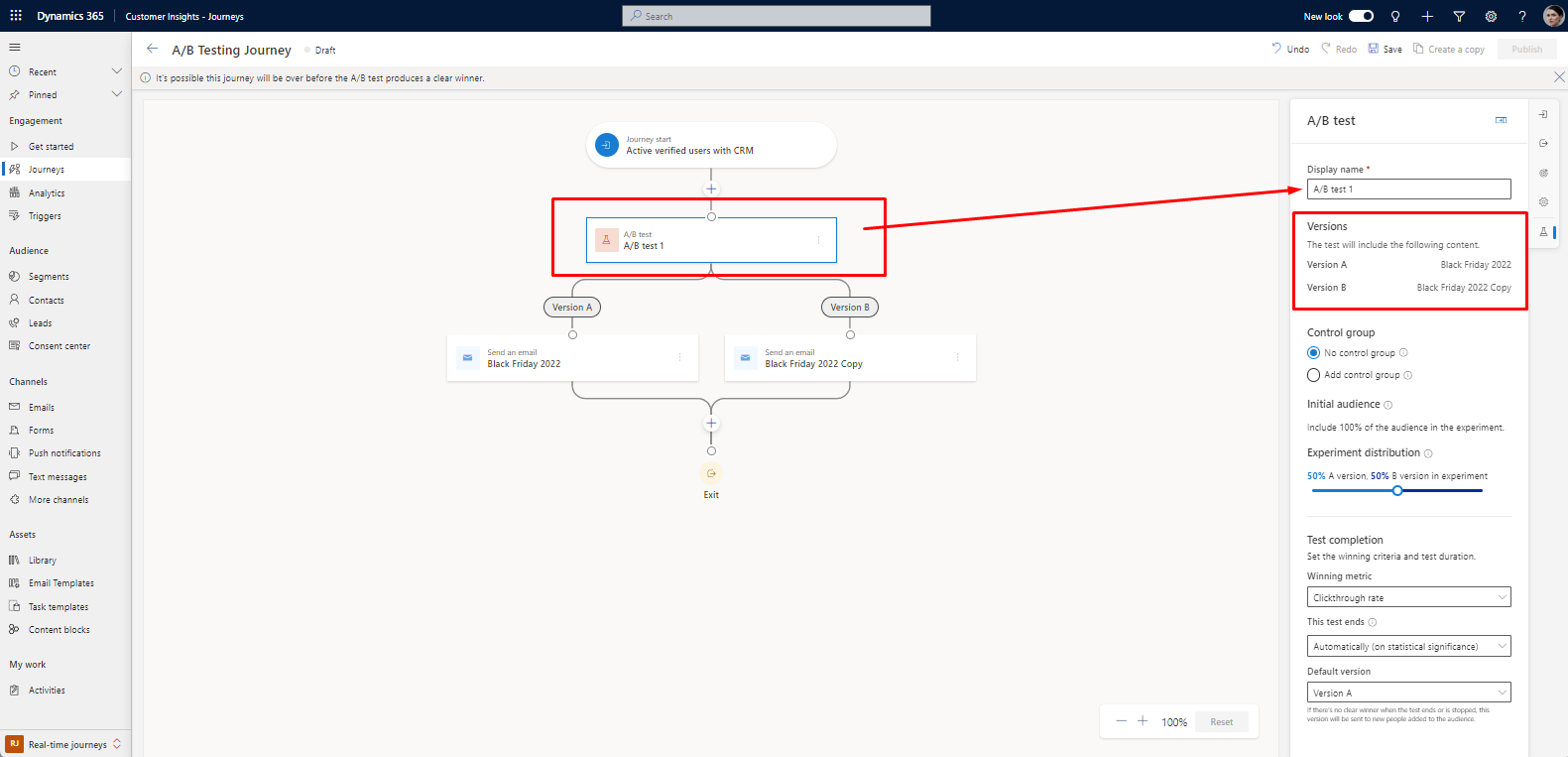

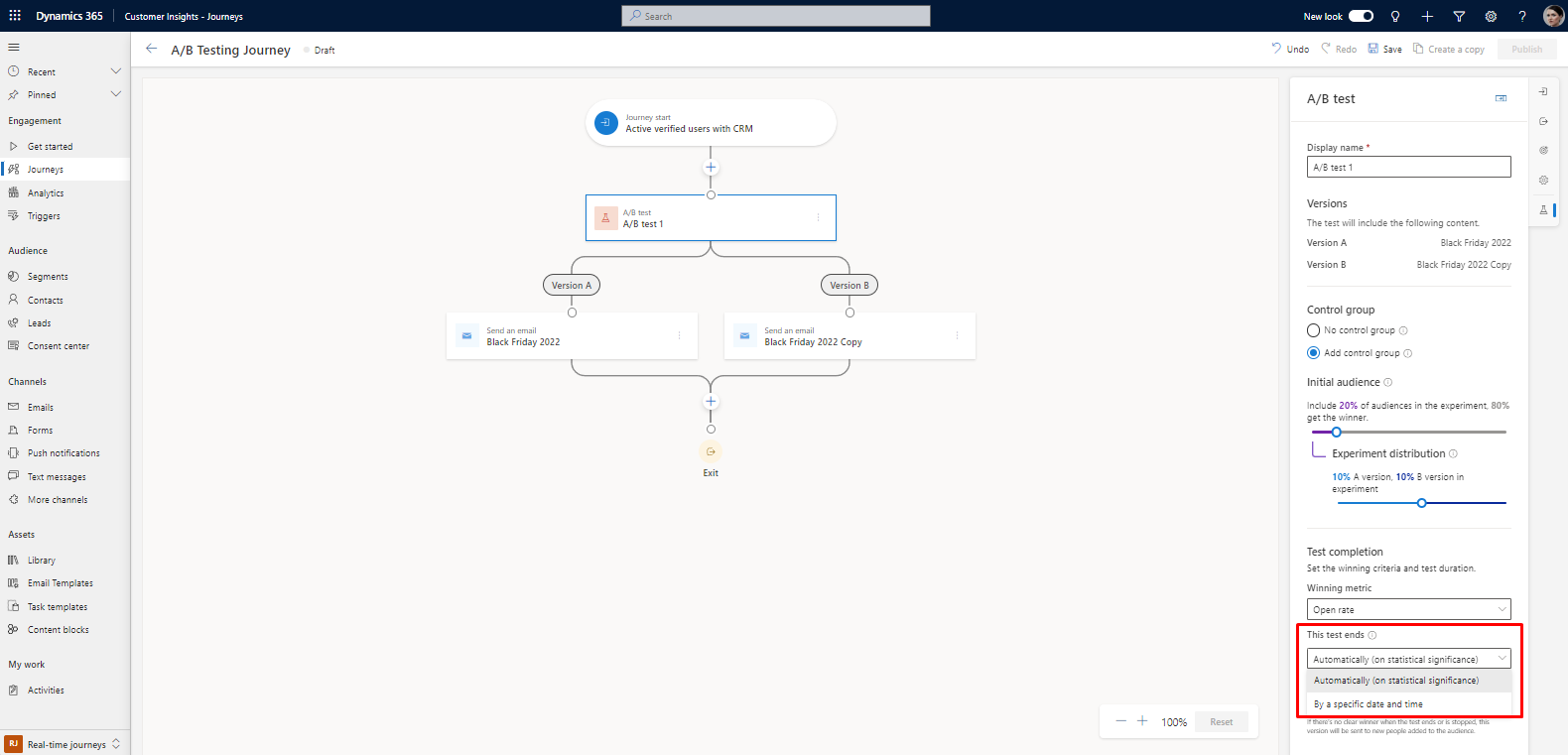

When selecting the A/B test tile, a side panel will open, presenting various parameters:

• Display Name: Provide a unique name for your test, which will be visible in both the A/B test panel and customer journey analytics.

• Versions: Select the content for the channel (e.g., two emails) from a dropdown list in the journey builder or the side panel.

• Initial Audience: Select the share of the audience to include in the A/B test before results are obtained. This option is exclusive to segment-based journeys, where the total number of customers is known. You can create two types of A/B tests.

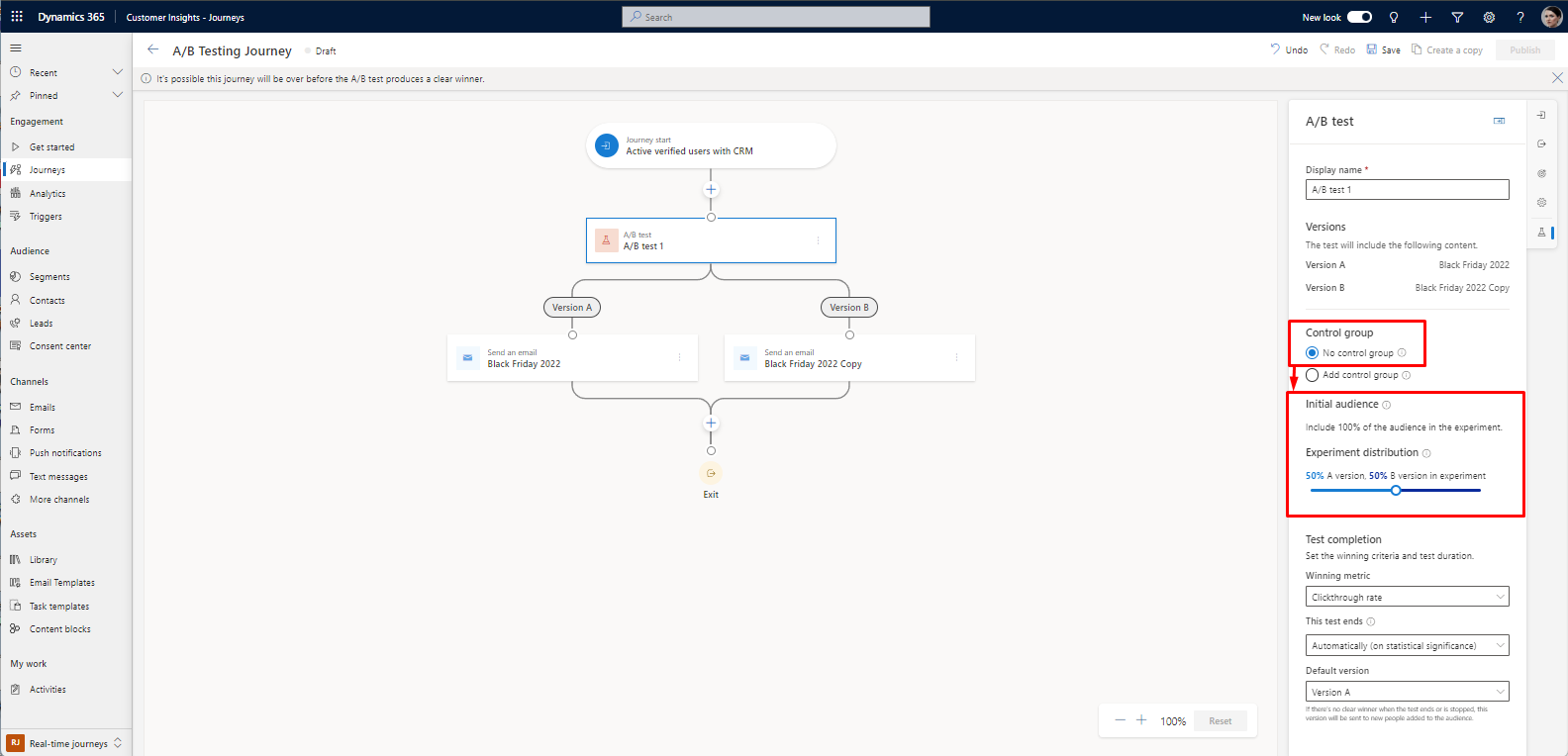

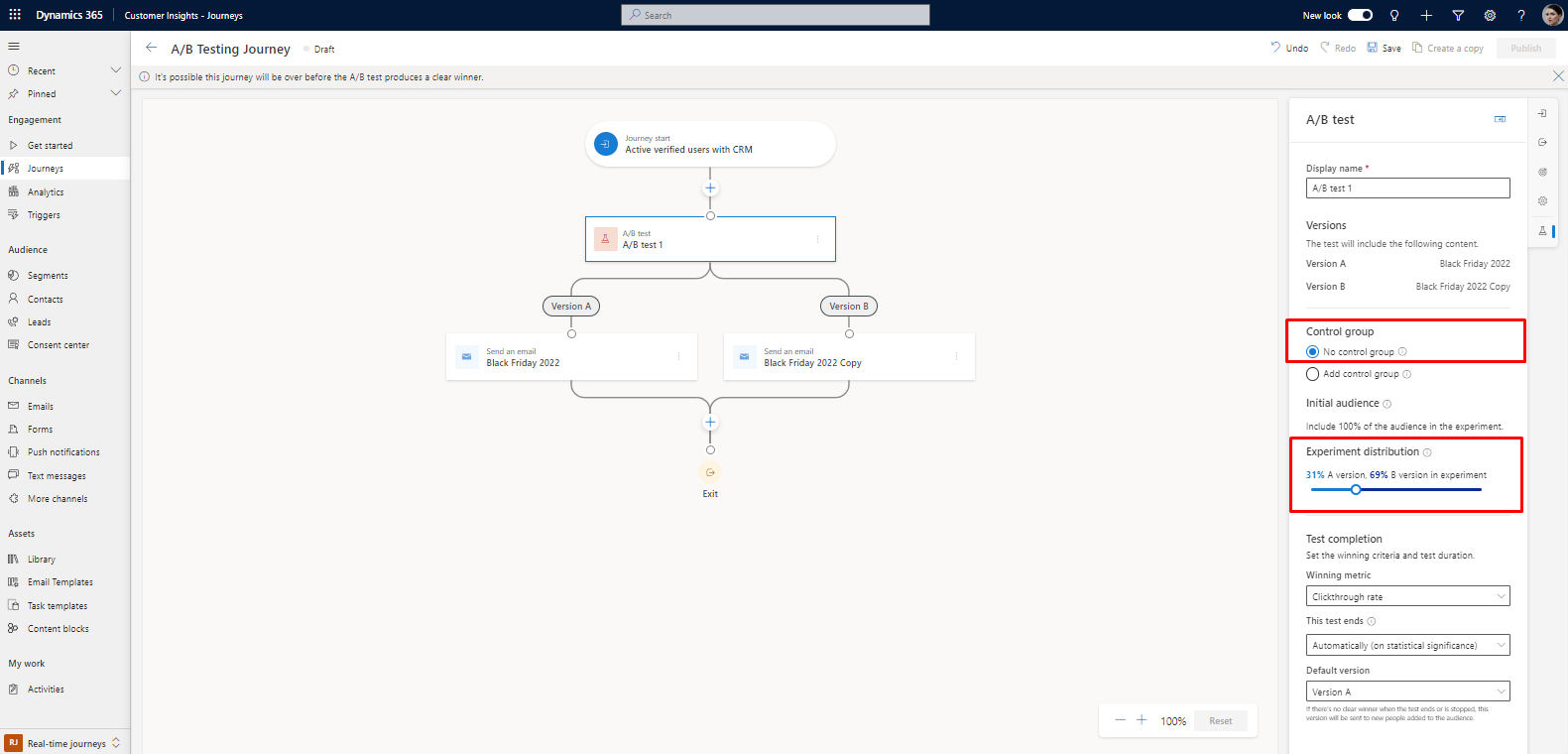

Type 1: A/B test without a control group:

This A/B test operates similarly to trigger-based journeys, wherein customers flow through the test organically until a winner is determined.

• Experiment Distribution: Choose your desired audience distribution. The slider defaults to 50-50 but can be set to any distribution between 10 and 90 percent. Traditionally, version A is the control group, and version B is the variant.

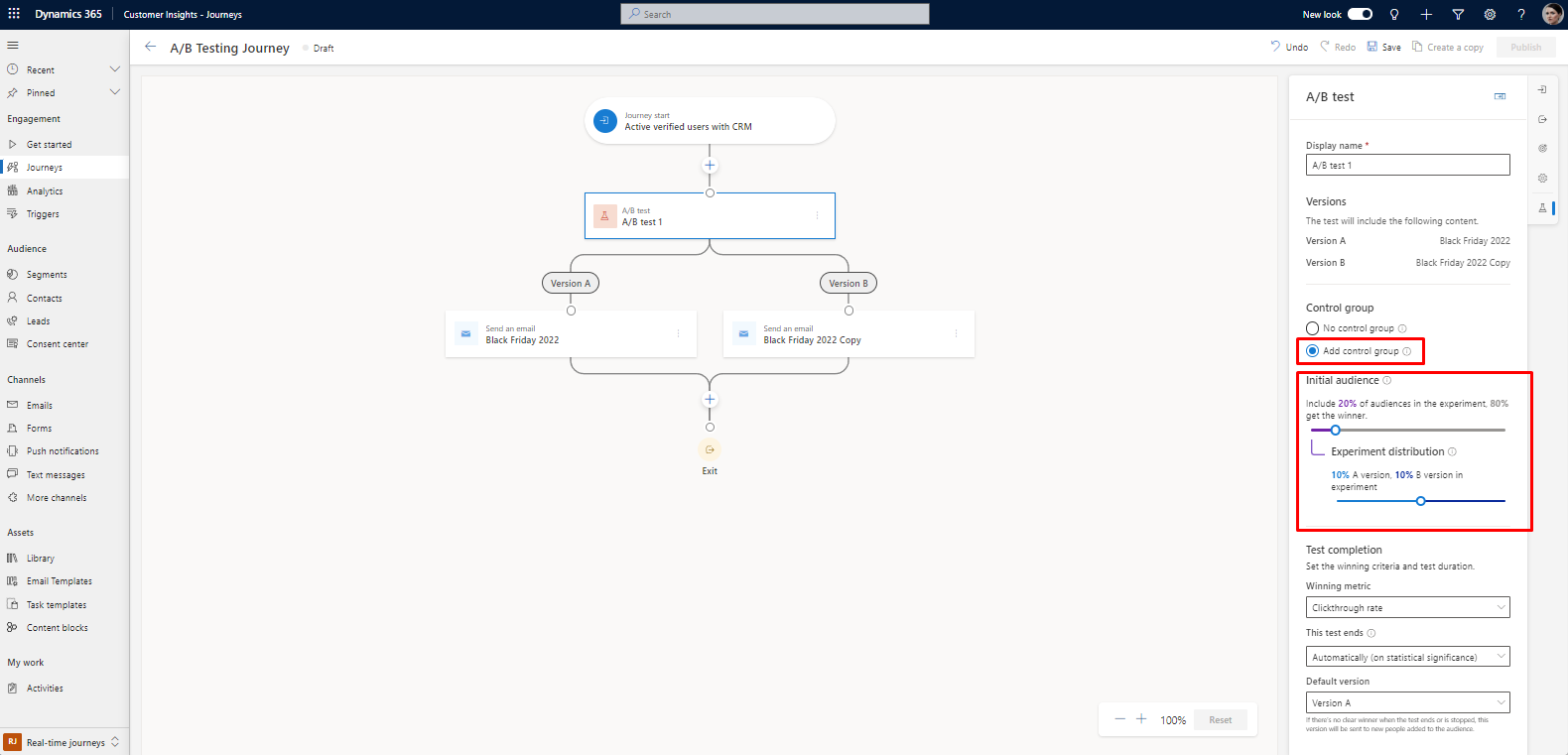
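To make the distribution setting concrete, here is a minimal sketch of how a percentage split routes customers to one version or the other. It only illustrates the idea and does not describe how the product assigns customers internally; the function name `assign_version` and the 70-30 split are assumptions chosen for the example.

```python
import random


def assign_version(share_a_percent: int, rng: random.Random) -> str:
    """Return "A" or "B" for one customer, given version A's share in percent.

    Hypothetical helper for illustration; the 10 to 90 percent bounds mirror
    the slider range described above.
    """
    if not 10 <= share_a_percent <= 90:
        raise ValueError("distribution must be between 10 and 90 percent")
    # Draw a number in [0, 100) and route to A when it falls inside A's share.
    return "A" if rng.random() * 100 < share_a_percent else "B"


rng = random.Random(42)  # fixed seed so the example is repeatable
assignments = [assign_version(70, rng) for _ in range(10_000)]
share_a = assignments.count("A") / len(assignments)
print(f"Share routed to version A: {share_a:.1%}")
```

With a large enough audience, the observed share settles close to the configured distribution, which is one reason a sufficiently large segment matters.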

Type 2: A/B test with a control group:

This allows you to specify how many customers you want to test. For example, if your segment has 100 loyalty members, you can first test on 20 percent or 20 members, where each version will receive 10 members. After the A/B test, the remaining 80 members will receive the winning version. You can always adjust the initial audience and the distribution to your liking.

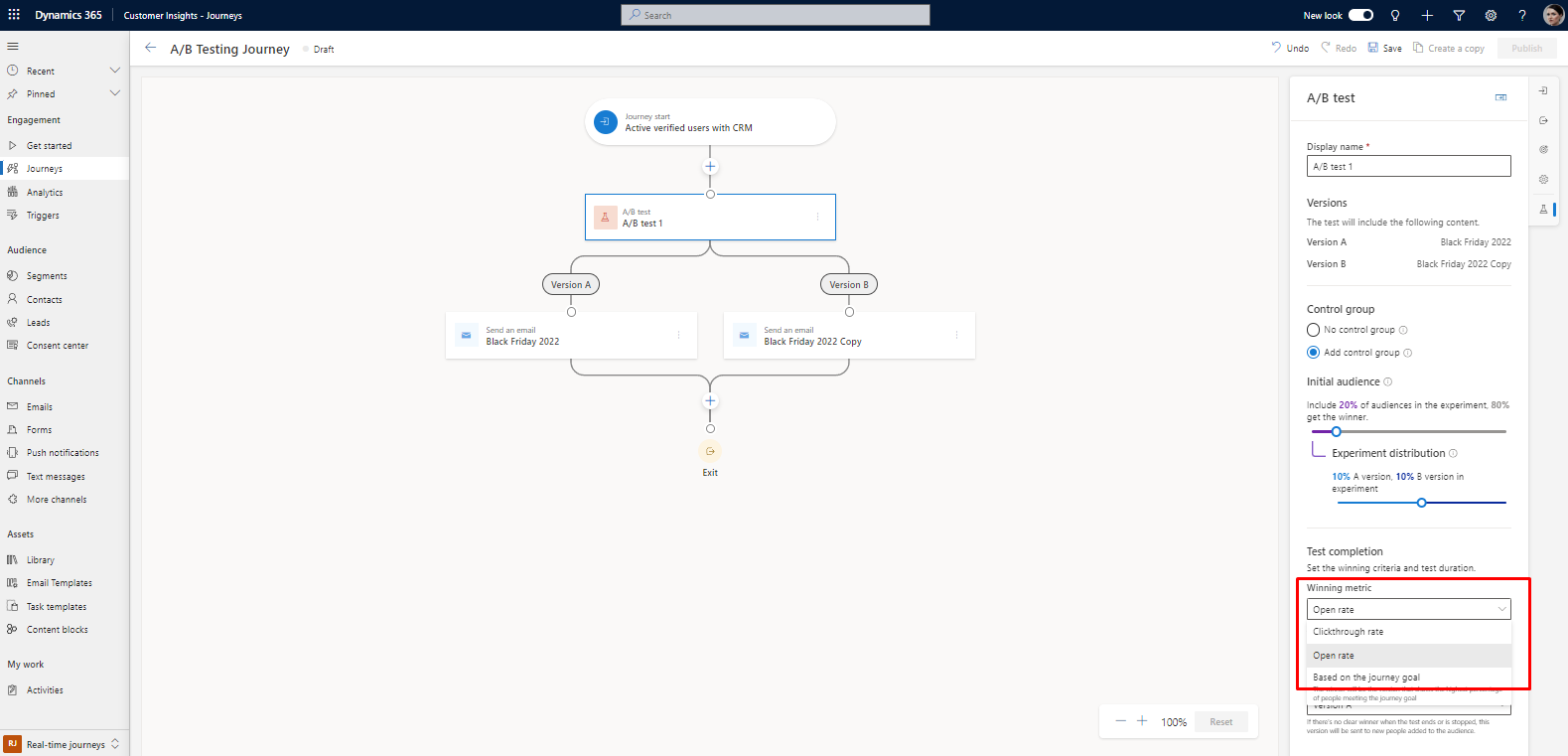
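The arithmetic in the loyalty-member example can be sketched in a few lines. The function `split_initial_audience` and its parameter names are hypothetical and exist only to show how the initial audience and the distribution combine.

```python
def split_initial_audience(segment_size: int,
                           initial_audience_percent: int,
                           share_a_percent: int = 50) -> dict:
    """Work out how many customers see each version during the test and how
    many are held back to receive the winning version afterwards."""
    test_size = segment_size * initial_audience_percent // 100
    version_a = test_size * share_a_percent // 100
    version_b = test_size - version_a
    remaining = segment_size - test_size
    return {"version_a": version_a, "version_b": version_b, "remaining": remaining}


# 100 loyalty members, 20 percent initial audience, 50-50 distribution
plan = split_initial_audience(100, 20)
print(plan)  # {'version_a': 10, 'version_b': 10, 'remaining': 80}
```

Changing either percentage shifts the balance between how much evidence the test gathers and how many customers are guaranteed to receive the winning version.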

• Winning Metric: Set the winning condition by selecting a metric, such as the version with the most journey goal events, clicks, or opens. For instance, choose the open rate option to enhance open rates.

• This Test Ends: Choose to end the test automatically or at a specified date and time. If the results reach statistical significance (80-95 percent confidence), the system automatically sends the winning version. If there's no clear winner after 30 days, the default version is sent.

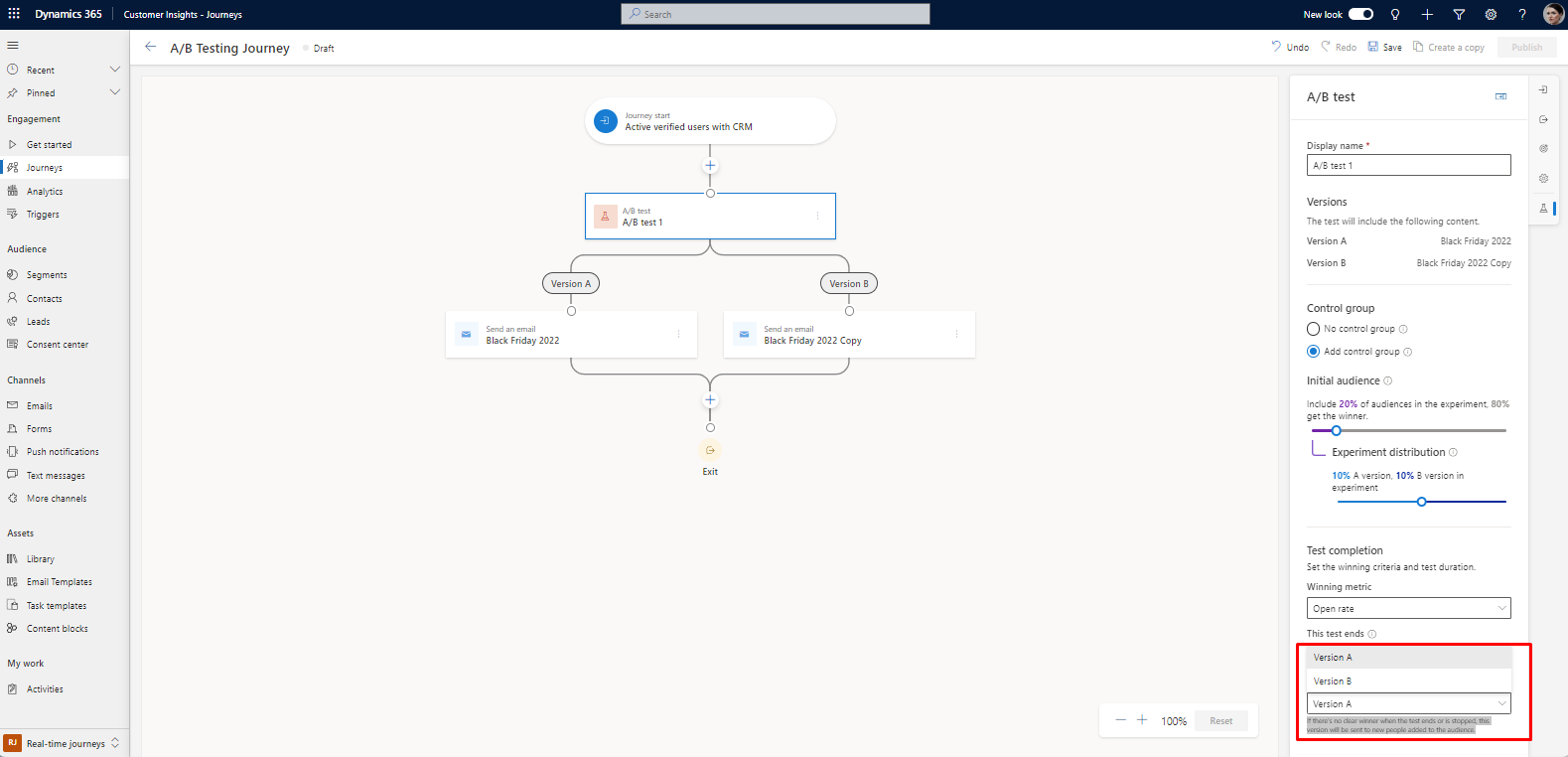

• Default Version: Select the version to fall back on. It is sent automatically if no winner is determined by the specified date and time.
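The interplay between the winning metric, the confidence level, and the default version can be illustrated with a small sketch. The tutorial does not state which statistical method the product uses, so the two-proportion z-test below is an assumption (a common textbook approach), and the function `pick_winner` with its parameters is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def pick_winner(opens_a: int, sent_a: int, opens_b: int, sent_b: int,
                confidence: float = 0.95, default: str = "A") -> tuple:
    """Return (version_to_send, is_conclusive) using open rate as the metric.

    Assumption: a two-sided two-proportion z-test stands in for whatever
    significance check the product actually runs.
    """
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        # Identical all-or-nothing results carry no signal: use the default.
        return default, False
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    if p_value < 1 - confidence:
        return ("B" if z > 0 else "A"), True
    # No statistically significant difference: fall back to the default version.
    return default, False


print(pick_winner(200, 1000, 260, 1000))  # clear gap in open rates
print(pick_winner(200, 1000, 205, 1000))  # open rates nearly identical
```

In this sketch, lowering `confidence` toward 0.80 makes a winner easier to declare but raises the chance that the difference is due to noise.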

4. A/B Test Configuration:

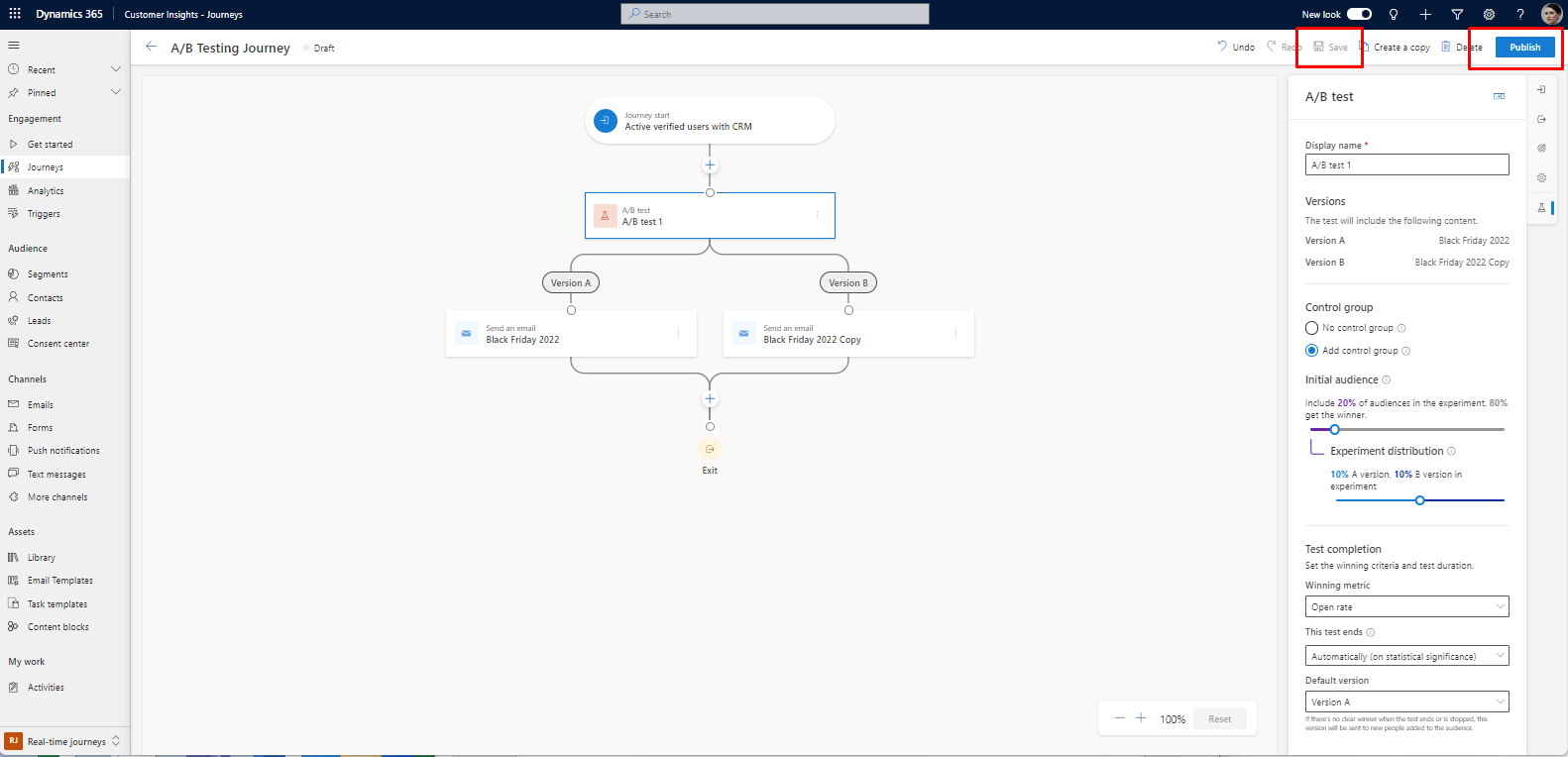

• Configure the A/B test side panel according to your preferences, ensuring that all elements are set before publishing the journey.

5. Monitoring A/B Test Lifecycle:

After publishing the journey, track the A/B test's lifecycle through different stages:

• Draft: These tests have not commenced, allowing you to edit the settings as needed.

• In Progress: Tests in this status are actively running. The settings are locked, and substantial changes cannot be made.

• Stopped: These tests have been halted, and the marketer has the option to choose which version to send.

• Ended: Tests in this category have concluded by identifying a statistically significant winner or reaching the scheduled end date and time. Tests that have ended cannot be reused.

There are three potential outcomes for A/B test results:

• Conclusive Winner: The test determines that one version outperforms the other. The winning version, distinguished by a "winner" badge, is distributed to new customers entering the journey.

• Inconclusive Test: The test results indicate that recipients are equally likely to engage with both version A and version B. Consequently, the default version is sent to new customers entering the journey.

• Test Stopped: If the test is halted prematurely by you or a colleague, the specified version is sent to new customers entering the journey.
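The three outcomes above amount to a simple rule for which version new customers receive. The sketch below restates that rule in code; the `Outcome` enum and the function `version_for_new_customers` are hypothetical names used only for illustration and are not part of any product API.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    CONCLUSIVE_WINNER = "conclusive winner"
    INCONCLUSIVE = "inconclusive test"
    STOPPED = "test stopped"


def version_for_new_customers(outcome: Outcome,
                              default_version: str,
                              winner: Optional[str] = None,
                              chosen_version: Optional[str] = None) -> str:
    """Return the version sent to customers who enter the journey after the test."""
    if outcome is Outcome.CONCLUSIVE_WINNER:
        if winner is None:
            raise ValueError("a conclusive test needs a winner")
        return winner
    if outcome is Outcome.INCONCLUSIVE:
        # No clear winner: the configured default version is used.
        return default_version
    # Stopped early: the marketer specifies which version to send.
    if chosen_version is None:
        raise ValueError("a stopped test needs a chosen version")
    return chosen_version


print(version_for_new_customers(Outcome.CONCLUSIVE_WINNER, "A", winner="B"))  # B
print(version_for_new_customers(Outcome.INCONCLUSIVE, "A"))                   # A
```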

Conclusion

A/B testing in segment-based journeys empowers marketers to refine their strategies based on real-time insights, enhancing overall campaign effectiveness and audience engagement. Regularly monitoring and interpreting A/B test results is crucial for making data-driven decisions and optimizing future marketing endeavors.
